Machine learning interviews are pivotal for aspiring data scientists and AI enthusiasts seeking to showcase their expertise and secure rewarding opportunities. This blog presents the "Top 35 Machine Learning Interview Questions", grouped into four segments: basic, intermediate, advanced, and situation-based.

This blog covers a wide spectrum of ML topics and provides insights and strategies to help you prepare for, and succeed in, your upcoming machine learning interview.

Table of Contents

1) Basic Machine Learning Interview Questions

2) Intermediate Machine Learning Interview Questions

3) Advanced Machine Learning Interview Questions

4) Situation-based Machine Learning Questions

5) Conclusion

Basic Machine Learning Interview Questions

Basic questions cover essential ML concepts, including supervised and unsupervised learning, overfitting, evaluation metrics, and handling missing data. Interviewers use these questions to assess candidates' foundational knowledge and their ability to explain fundamental ML principles and techniques concisely.

1) Define machine learning and its primary types.

Machine learning (ML) is a specialised field of Artificial Intelligence (AI). It focuses on developing algorithms and models that learn patterns from data. These models let computers make predictions or decisions without being explicitly programmed for each task. ML is commonly divided into three categories:

a) Supervised Learning: The algorithm is trained on labelled data, where each input is paired with its corresponding output. A supervised model learns to map inputs to outputs, which allows it to make predictions on unseen data.

b) Unsupervised Learning: Here, the algorithm is trained on unlabelled data and learns patterns and structures within the data without explicit guidance. Clustering and dimensionality reduction are common tasks in unsupervised learning.

c) Reinforcement Learning: An agent interacts with an environment and learns to achieve a goal through rewards and penalties. The agent learns by trial and error, adjusting its actions to maximise cumulative reward over time.

2) What's the difference between supervised and unsupervised learning?

The core difference between supervised and unsupervised learning lies in the presence of labelled data. In a supervised learning model, an algorithm is provided with labelled input-output pairs during training, allowing it to learn patterns and make accurate predictions. On the other hand, unsupervised learning deals with unlabelled data, where the algorithm tries to discover underlying patterns and relationships without explicit output labels.

3) Explain the bias-variance tradeoff in Machine Learning.

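The bias-variance tradeoff is a fundamental machine learning concept describing the balance between two sources of error. Making a model more flexible typically lowers its bias but raises its variance, and vice versa. The goal is therefore a level of complexity that minimises the total error on unseen data: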

a) Bias: It represents the error introduced by approximating a real-world problem with an overly simplified model. High bias can lead to underfitting, where the model is too simplistic to capture the underlying patterns in the data.

b) Variance: It refers to the error introduced by the model's sensitivity to small fluctuations in the training data. High variance can lead to a model that performs well on the training data but poorly on unseen data, a problem known as overfitting.

4) Describe popular machine learning algorithms like Decision Trees and K-Nearest Neighbors.

a) Decision trees: Decision trees are a widely used supervised learning algorithm for both classification and regression tasks. They work by recursively splitting the data based on feature values to build a tree-like structure. Each internal node represents a decision based on a feature, and each leaf node represents a predicted outcome.

b) K-Nearest Neighbors (KNN): KNN is a simple yet effective algorithm used for classification and regression tasks. To make a prediction, KNN finds the K training points closest to the new point in the feature space. The output is the majority class among those neighbours (for classification) or the average of their target values (for regression).
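A minimal pure-Python sketch of KNN follows, covering both the classification case (majority vote) and the regression case (average). It assumes Euclidean distance and small in-memory lists. The function names and toy data are illustrative only; real projects would normally use an optimised library implementation.

```python
import math
from collections import Counter

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def k_nearest(train_X, query, k):
    """Indices of the k training points closest to the query."""
    order = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))
    return order[:k]

def knn_classify(train_X, train_y, query, k=3):
    # Majority vote among the k nearest neighbours
    votes = Counter(train_y[i] for i in k_nearest(train_X, query, k))
    return votes.most_common(1)[0][0]

def knn_regress(train_X, train_y, query, k=3):
    # Average target value of the k nearest neighbours
    return sum(train_y[i] for i in k_nearest(train_X, query, k)) / k

X = [(1.0, 1.0), (1.5, 2.0), (5.0, 5.0), (6.0, 5.5), (5.5, 6.0)]
labels = ["a", "a", "b", "b", "b"]
targets = [1.0, 2.0, 10.0, 12.0, 11.0]
print(knn_classify(X, labels, (5.2, 5.1), k=3))   # b
print(knn_regress(X, targets, (5.2, 5.1), k=3))   # 11.0
```

Because KNN has no training phase, all the cost is paid at prediction time. This is why large-scale implementations rely on spatial indexes rather than the full sort used here.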

5) How do you handle missing data in a dataset during model training?

Dealing with missing data is crucial to ensure the accuracy and robustness of a machine learning model. Common approaches to handling missing data include:

a) Removal: If the amount of missing data is small, the rows or columns containing missing values can be removed. However, this approach may lead to the loss of valuable information.

b) Imputation: This involves filling in the missing values with estimated or substituted values. Simple imputation methods include mean, median, or mode imputation. More sophisticated techniques like K-nearest neighbours (KNN) imputation or regression imputation can also be used.

c) Using special values: Sometimes, missing values can be represented by a special value (e.g., -1 or NaN), which the model can interpret accordingly.
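The removal and simple imputation strategies can be sketched in a few lines of Python. This sketch assumes missing entries are stored as `None` in plain lists, and the helper names are hypothetical. In practice, imputation statistics should be computed on the training split only and then reused on validation and test data to avoid leakage.

```python
import statistics

def impute_column(values, strategy="median"):
    """Replace None entries with a statistic computed from the observed values."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mode":  # most frequent value, suited to categorical columns
        fill = statistics.mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

def drop_incomplete_rows(rows):
    """Removal strategy: keep only rows with no missing (None) entries."""
    return [row for row in rows if all(v is not None for v in row)]

ages = [25, None, 31, 40, None, 28]
print(impute_column(ages, "median"))    # [25, 29.5, 31, 40, 29.5, 28]
colours = ["red", None, "blue", "red"]
print(impute_column(colours, "mode"))   # ['red', 'red', 'blue', 'red']
```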

6) What is overfitting, and how can it be prevented?

Overfitting occurs when a model becomes too complex and starts to memorise the training data, including its noise, instead of learning patterns that generalise to unseen data. This leads to poor performance on new data. To prevent overfitting:

a) Cross-validation: Use cross-validation techniques to gauge the model's overall performance on different subsets of the data. This helps in identifying if the model is overfitting.

b) Regularisation: Introduce regularisation techniques like L1 or L2 regularisation, which penalise complex models and encourage simpler ones.

c) Feature selection: Select only the most relevant features for model training to reduce complexity.

d) Early stopping: Monitor the model's performance on a validation set and stop training when the performance starts to degrade.
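Here is a small sketch of the usual "patience" rule for early stopping. Training stops once the validation loss has failed to improve for a set number of consecutive epochs, and the best epoch is remembered so its weights can be restored. The `patience` parameter and the loss values are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best: remember it and reset the patience counter
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.58, 0.65]
print(early_stopping_epoch(losses, patience=2))  # (3, 5)
```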

7) Discuss the ROC curve and its significance in classification models.

The Receiver Operating Characteristic (ROC) curve is a graphical representation which can illustrate the performance of a binary classification model at various classification thresholds. It plots the true positive rate (sensitivity) against the false positive rate (1-specificity). The ROC curve is important because:

a) It allows model comparison: The ROC curves of different models can be compared, and the model with the higher area under the curve (AUC) is generally considered the better classifier.

b) It visualises the tradeoff: The ROC curve shows the tradeoff between the true positive rate and the false positive rate at different classification thresholds. These rates help in selecting an appropriate threshold based on the application's requirements.

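c) It indicates discriminative ability: The closer the ROC curve is to the upper-left corner (an AUC close to 1), the better the model separates the two classes. A curve along the diagonal (AUC = 0.5) is no better than random guessing.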
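The sketch below shows where the curve comes from. It sweeps the decision threshold over each distinct score and records the (false positive rate, true positive rate) pair at each threshold. It then integrates with the trapezoidal rule to get the AUC. It assumes binary labels coded as 0/1 and at least one example of each class; the toy scores are made up.

```python
def roc_points(y_true, scores):
    """(FPR, TPR) pairs obtained by sweeping the threshold over each distinct score."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= t and y == 0)
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under the ROC curve via the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
pts = roc_points(y_true, scores)
print(auc(pts))  # 0.75
```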

8) What evaluation metrics would you use for regression models?

For regression models, various evaluation metrics can be used to assess their performance. Some common metrics include:

a) Mean Absolute Error (MAE): It measures the average absolute difference between the predicted values and the actual values.

b) Mean Squared Error (MSE): It measures the average squared difference between the actual values and the predicted values, giving more weight to larger errors.

c) Root Mean Squared Error (RMSE): It is the square root of MSE and provides a metric in the same unit as the target variable.

d) R-squared (R²): It represents the proportion of the variance in the target variable that is explained by the model. A higher R² (at most 1) indicates a better fit.
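All four metrics can be computed directly from their definitions, as in this short sketch. The values are toy data and the function name is illustrative. Here R² is computed as 1 minus the ratio of the residual sum of squares to the total sum of squares around the mean.

```python
import math

def regression_metrics(y_true, y_pred):
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)  # same unit as the target
    mean_y = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    r2 = 1 - ss_res / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}

y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 9.0]
print(regression_metrics(y_true, y_pred))  # MAE 0.5, MSE 0.5, RMSE about 0.707, R2 0.9
```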

9) Differentiate between feature selection and feature extraction.

a) Feature selection: Feature selection involves choosing a subset of the most relevant features from the original set. It helps in simplifying the model, reducing computation time, and improving model interpretability.

b) Feature extraction: Feature extraction, on the other hand, creates new features from the original set or transforms them into a lower-dimensional space. Techniques like Principal Component Analysis (PCA) and Singular Value Decomposition (SVD) are common examples of feature extraction methods.

10) Explain the working of cross-validation in model assessment.

Cross-validation is a resampling technique used to assess a model's performance by partitioning the data into subsets:

a) K-Fold cross-validation: The data is divided into K equal-sized folds. The model is trained on K-1 folds and validated on the remaining fold. This process is repeated K times, with each fold serving as the validation set exactly once. The performance metrics are then averaged to get the final evaluation.

b) Leave-One-Out Cross-Validation (LOOCV): Each data point is used as the validation set once, and the rest of the data is used for training. LOOCV is the special case of K-fold with K equal to the number of samples. It is computationally expensive but gives a nearly unbiased estimate of performance, although that estimate can have high variance.
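The K-fold procedure can be sketched as follows. `fit` and `score` are hypothetical callbacks standing in for any training and evaluation routine. The toy "model" simply predicts the training mean. For simplicity the data is not shuffled here, although shuffling (or stratifying) before splitting is usual in practice. Setting `k` equal to the number of samples turns this into LOOCV.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; fold sizes differ by at most one."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

def cross_validate(X, y, k, fit, score):
    """Average validation score over the k folds."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in val_idx], [y[i] for i in val_idx]))
    return sum(scores) / len(scores)

def fit_mean(X_train, y_train):
    # Toy model: always predict the mean of the training targets
    return sum(y_train) / len(y_train)

def mae_score(model, X_val, y_val):
    return sum(abs(t - model) for t in y_val) / len(y_val)

X = list(range(10))
y = [2 * x for x in X]
print(cross_validate(X, y, 5, fit_mean, mae_score))
```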

Intermediate Machine Learning Interview Questions

Intermediate questions explore topics such as Support Vector Machines (SVM), ensemble learning, recurrent neural networks, and regularisation. Interviewers aim to evaluate candidates' proficiency in applying ML algorithms to solve more complex problems and their ability to explain these concepts in detail.

11) Detail the working of Support Vector Machines (SVM) and their kernel functions.

Support Vector Machines (SVM) are powerful supervised learning algorithms used for classification and regression tasks. SVM works by finding the optimal hyperplane that best separates the classes in the data. The hyperplane is chosen to maximise the margin between the two classes, and the data points closest to the hyperplane are called support vectors.

SVM can handle both linearly separable and non-linearly separable data by using kernel functions. Kernel functions effectively map the original feature space into a higher-dimensional space where a linear hyperplane can separate the data. That hyperplane corresponds to a non-linear decision boundary in the original space. In practice, the kernel computes inner products in the higher-dimensional space directly, without performing the transformation explicitly (the "kernel trick").
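The kernel trick can be checked numerically. In this sketch, the degree-2 polynomial kernel k(x, z) = (x · z)² gives the same value as an explicit dot product in a 3-D feature space, without ever building that space. The RBF kernel is included as another common choice. The input vectors and the `gamma` value are arbitrary examples.

```python
import math

def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def poly_kernel(x, z):
    """Homogeneous polynomial kernel of degree 2: k(x, z) = (x . z)^2."""
    return dot(x, z) ** 2

def rbf_kernel(x, z, gamma=0.5):
    """Gaussian (RBF) kernel; its implicit feature space is infinite-dimensional."""
    return math.exp(-gamma * sum((p - q) ** 2 for p, q in zip(x, z)))

x, z = (1.0, 2.0), (3.0, -1.0)
print(poly_kernel(x, z), dot(phi(x), phi(z)))  # both approximately 1.0
print(rbf_kernel(x, z))
```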

12) How does the Expectation-Maximisation (EM) algorithm work in unsupervised learning?

The Expectation-Maximisation (EM) algorithm is an iterative optimisation technique used in unsupervised learning, especially for models involving latent variables. EM is used to estimate the parameters of probabilistic models when some data is missing or unobserved. The algorithm alternates between two steps:

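a) Expectation step (E-step): In this step, the expected values of the missing or latent variables are calculated from the current model parameters. For example, the E-step can compute the probability that each point belongs to each cluster.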

b) Maximisation step (M-step): In this step, the model parameters are updated to maximise the likelihood of the observed data, incorporating the expected values of the missing data obtained in the E-step.
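As a concrete instance, here is a compact sketch of EM fitting a two-component, one-dimensional Gaussian mixture. The latent variable is which component generated each point. The E-step computes each component's responsibility for each point. The M-step then re-estimates means, variances, and mixing weights from those responsibilities. The initialisation, fixed iteration count, and small variance floor are simplifying assumptions. Real implementations also monitor the log-likelihood to decide when to stop.

```python
import math
import random

def normal_pdf(x, mu, var):
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(data, n_iter=50):
    """Fit a two-component 1-D Gaussian mixture with EM."""
    mu = [min(data), max(data)]  # crude initialisation at the extremes
    var = [1.0, 1.0]
    weight = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: posterior responsibility of each component for each point
        resp = []
        for x in data:
            p = [weight[k] * normal_pdf(x, mu[k], var[k]) for k in range(2)]
            total = sum(p)
            resp.append([pk / total for pk in p])
        # M-step: re-estimate parameters from responsibility-weighted data
        for k in range(2):
            nk = sum(r[k] for r in resp)
            mu[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            # Small floor keeps a variance from collapsing to zero
            var[k] = sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, data)) / nk + 1e-6
            weight[k] = nk / len(data)
    return mu, var, weight

rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(200)] + [rng.gauss(5.0, 1.0) for _ in range(200)]
mu, var, weight = em_gmm_1d(data)
print(sorted(mu))  # close to the true means 0 and 5
```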

13) What are Recurrent Neural Networks (RNN), and where are they used?

Recurrent Neural Networks (RNN) are a type of neural network architecture designed to handle sequential data. Unlike feedforward neural networks, RNNs have recurrent connections that pass a hidden state from one time step to the next, allowing information to persist across time steps. This enables RNNs to capture temporal dependencies in data. They are widely used in tasks like natural language processing, speech recognition, time series prediction, and sentiment analysis.

However, traditional RNNs suffer from the vanishing gradient problem, limiting their ability to capture long-term dependencies. Advanced RNN variants like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) were developed to address this issue.

14) Discuss the advantages and limitations of ensemble learning methods.

Ensemble learning methods combine multiple base models to make predictions, and they often outperform individual models. The advantages of ensemble learning include improved accuracy, robustness against overfitting, and the ability to handle complex relationships in data.

Ensemble methods such as the Random Forest or Gradient Boosting are widely used. However, ensemble learning also has limitations such as increased computation time, difficulty in interpretation, and the risk of model redundancy if base models are highly correlated.

15) Explain Principal Component Analysis (PCA) and its applications in dimensionality reduction.

PCA is a popular unsupervised learning technique used for dimensionality reduction. It transforms the original feature space into a new, lower-dimensional space while retaining the most important information from the original data.

PCA finds orthogonal axes (principal components) that capture the maximum variance in the data. Keeping only the first few components reduces the number of dimensions. Applications of PCA include data visualisation, noise reduction, and feature extraction. It can also speed up machine learning algorithms and reduce overfitting by discarding low-variance components.

16) How can you deal with imbalanced datasets during model training?

Dealing with imbalanced datasets is crucial, as many models trained on them become biased towards the majority class. Some strategies to handle imbalanced datasets are:

a) Resampling: Either oversample the minority class or undersample the majority class to balance the class distribution.

b) Synthetic data generation: Use techniques like Synthetic Minority Over-sampling Technique (SMOTE) to generate synthetic samples of the minority class.

c) Class weights: Assign higher weights to the minority class during model training to give it more importance.

d) Anomaly detection: When the minority class is extremely rare, treat its examples as anomalies and frame the task as an anomaly detection problem.
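Class weights and random oversampling are the simplest of these strategies to implement. The sketch below assumes labels in a plain list. The weighting formula n_samples / (n_classes × class_count) is the same heuristic scikit-learn uses for `class_weight="balanced"`. Resampling should be applied to the training split only, never to validation or test data.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows of smaller classes until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for cls, c in counts.items():
        idx = [i for i, label in enumerate(y) if label == cls]
        for _ in range(target - c):
            i = rng.choice(idx)
            X_out.append(X[i])
            y_out.append(cls)
    return X_out, y_out

y = [0] * 90 + [1] * 10
X = [[float(i)] for i in range(100)]
print(balanced_class_weights(y))   # {0: 0.555..., 1: 5.0}
X_bal, y_bal = random_oversample(X, y)
print(dict(Counter(y_bal)))        # {0: 90, 1: 90}
```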

17) Describe the working of the backpropagation algorithm in neural networks.

Backpropagation is a widely used algorithm to train neural networks. It involves two phases: forward propagation and backward propagation.

a) Forward propagation: During this phase, the input data is fed into the neural network, and computations are performed layer by layer. Each neuron's output is calculated using activation functions and passed to the subsequent layers until the final output is obtained.

b) Backward propagation: In this phase, a loss function measures the difference between the actual target and the predicted output. The gradients of the loss with respect to the model's parameters are then computed layer by layer, from the output back to the input, using the chain rule. Optimisation algorithms like gradient descent use these gradients to update the parameters, minimising the loss and improving the model's performance.
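To make the chain rule concrete, this sketch trains a tiny network on a single example using squared-error loss. The network has one tanh hidden unit and a linear output. The backward pass computes each gradient by multiplying local derivatives from the output back towards the input. The network shape, initial parameters, and learning rate are illustrative assumptions. In practice, frameworks automate this step with automatic differentiation.

```python
import math

def forward(params, x):
    w1, b1, w2, b2 = params
    z1 = w1 * x + b1
    h = math.tanh(z1)       # hidden activation
    y_hat = w2 * h + b2     # linear output
    return h, y_hat

def loss_and_grads(params, x, y):
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    loss = 0.5 * (y_hat - y) ** 2
    # Backward pass: chain rule from the output back to the input layer
    d_yhat = y_hat - y            # dL/dy_hat
    d_w2 = d_yhat * h             # dL/dw2
    d_b2 = d_yhat                 # dL/db2
    d_h = d_yhat * w2             # dL/dh
    d_z1 = d_h * (1 - h * h)      # tanh'(z1) = 1 - tanh(z1)^2
    d_w1 = d_z1 * x               # dL/dw1
    d_b1 = d_z1                   # dL/db1
    return loss, (d_w1, d_b1, d_w2, d_b2)

def train(params, x, y, lr=0.1, steps=100):
    """Plain gradient descent on a single training example."""
    for _ in range(steps):
        _, grads = loss_and_grads(params, x, y)
        params = tuple(p - lr * g for p, g in zip(params, grads))
    return params

params = (0.5, 0.0, -0.3, 0.1)
x, y = 1.5, 0.8
print(loss_and_grads(params, x, y)[0], loss_and_grads(train(params, x, y), x, y)[0])
```

A quick way to validate hand-written gradients like these is to compare them with finite differences of the loss.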

18) What are some regularisation techniques, and why are they necessary?

Regularisation techniques prevent overfitting in machine learning models and improve generalisation to unseen data. Common regularisation techniques include:

a) L1 Regularisation (Lasso): It adds the absolute value of the coefficients as a penalty term to the loss function, encouraging sparsity in the model.

b) L2 Regularisation (Ridge): It adds the squared value of the coefficients as a penalty term to the loss function, leading to smaller but non-zero coefficients.

c) Dropout: It randomly drops a fraction of neurons during training, reducing interdependence between neurons and preventing overfitting.

d) Early stopping: It halts training when performance on a validation set starts to degrade, preventing the model from overfitting the training data.
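The difference between the L1 and L2 penalties is easiest to see in one dimension. Consider minimising 0.5 * (w - z)² plus a penalty. The L1 solution is soft-thresholding, which sets small coefficients exactly to zero. The L2 solution shrinks every coefficient proportionally. The sketch also includes "inverted" dropout, a common variant that rescales surviving activations during training so nothing needs to change at inference time. The penalty strengths and inputs are arbitrary.

```python
import random

def l1_shrink(z, lam):
    """Minimiser of 0.5*(w - z)**2 + lam*|w|: soft-thresholding, can return exactly 0."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def l2_shrink(z, lam):
    """Minimiser of 0.5*(w - z)**2 + 0.5*lam*w**2: proportional shrinkage, nonzero unless z is 0."""
    return z / (1 + lam)

def inverted_dropout(activations, p_drop, rng):
    """Zero each unit with probability p_drop and rescale survivors by 1/(1 - p_drop)."""
    keep = 1 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

z_values = [3.0, 0.4, -0.2, -2.5]
print([l1_shrink(z, 0.5) for z in z_values])  # [2.5, 0.0, 0.0, -2.0]
print([l2_shrink(z, 0.5) for z in z_values])  # every value shrunk by 1/1.5, none zero
print(inverted_dropout([1.0, 1.0, 1.0, 1.0], 0.5, random.Random(0)))
```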

19) Discuss the concept of hyperparameter tuning and its importance.

Hyperparameter tuning involves finding the best values for hyperparameters. These are settings chosen before training begins rather than learned from the data, such as the learning rate or the depth of a decision tree. Hyperparameters significantly influence the model's performance.

Performing hyperparameter tuning is essential, as choosing inappropriate values can lead to poor model performance or overfitting. Techniques like grid search, random search, and Bayesian optimisation are used to explore the hyperparameter space and find the best combination of hyperparameter values for the model.
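Grid search and random search can both be sketched in a few lines. Here `validation_score` is a hypothetical stand-in for "train a model with these hyperparameters and score it on validation data". The parameter names and ranges are assumptions. Note that random search samples the learning rate on a log scale, a common choice for scale-type hyperparameters.

```python
import itertools
import random

def validation_score(lr, depth):
    """Hypothetical objective standing in for a real train-and-validate run (higher is better)."""
    return -((lr - 0.1) ** 2) * 100 - (depth - 4) ** 2

def grid_search(grid):
    """Evaluate every combination in the grid and keep the best."""
    best = None
    for lr, depth in itertools.product(grid["lr"], grid["depth"]):
        s = validation_score(lr, depth)
        if best is None or s > best[0]:
            best = (s, {"lr": lr, "depth": depth})
    return best

def random_search(n_trials, seed=0):
    """Evaluate n_trials randomly sampled configurations and keep the best."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {"lr": 10 ** rng.uniform(-3, 0), "depth": rng.randint(1, 10)}
        s = validation_score(**params)
        if best is None or s > best[0]:
            best = (s, params)
    return best

print(grid_search({"lr": [0.01, 0.1, 1.0], "depth": [2, 4, 8]})[1])  # {'lr': 0.1, 'depth': 4}
print(random_search(50)[1])
```

Bayesian optimisation follows the same loop. The difference is that it chooses each next configuration from a model of past results instead of from a fixed grid or random draws.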

20) How do you handle the curse of dimensionality in machine learning?

The curse of dimensionality refers to the challenges posed by high-dimensional data. As the number of features increases, the data becomes sparse, and the model's performance may degrade. To handle the curse of dimensionality:

a) Feature selection: Choose only the most relevant features and eliminate redundant or irrelevant ones.

b) Feature extraction: Use techniques like PCA or autoencoders to transform the high-dimensional data into a lower-dimensional representation.

c) Regularisation: L1 regularisation can shrink the weights of irrelevant features to zero, acting as a form of feature selection. Regularisation in general also helps prevent overfitting.

d) Dimensionality reduction algorithms: Algorithms like t-SNE and UMAP are specialised for visualisation and clustering tasks in high-dimensional data.
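One symptom of the curse of dimensionality is that distances concentrate. As the number of dimensions grows, the nearest and farthest points from a query end up at similar distances, which weakens distance-based methods such as KNN. This small experiment with uniform random data illustrates the effect; the sample size and dimensions are arbitrary choices.

```python
import math
import random

def distance_contrast(dim, n_points=200, seed=0):
    """(max - min) / min distance from a random query to uniform random points in [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)

for d in (2, 10, 100, 1000):
    # The relative gap between nearest and farthest neighbour shrinks as d grows
    print(d, round(distance_contrast(d), 3))
```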

Advanced Machine Learning Interview Questions

Advanced questions delve into cutting-edge ML topics like Generative Adversarial Networks (GANs), attention mechanisms, Boltzmann Machines, and Bayesian optimisation. Interviewers ask these questions to assess candidates' expertise in advanced ML techniques and their awareness of current trends in the field.

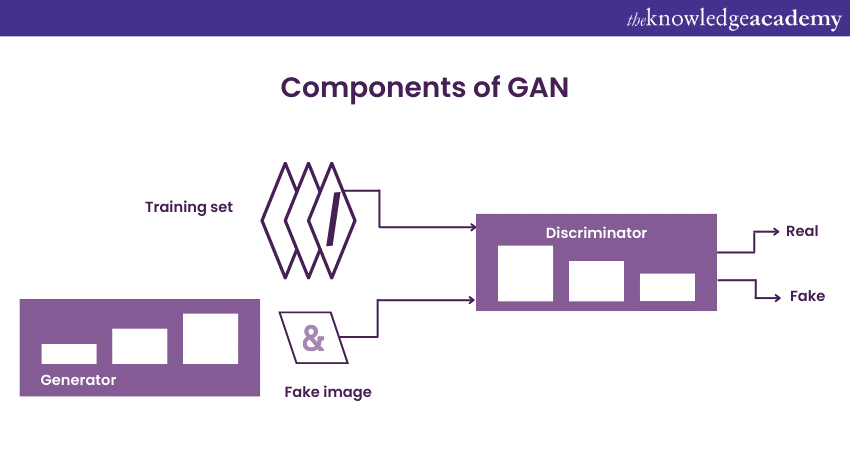

21) Explain Generative Adversarial Networks (GANs) and their applications.

Generative Adversarial Networks (GANs) are a type of deep learning model consisting of two neural networks: a generator and a discriminator. The generator aims to produce realistic data samples from random noise, while the discriminator's goal is to distinguish between real and generated samples. During training, the generator and discriminator play a minimax game, where the generator tries to deceive the discriminator, and the discriminator strives to correctly classify real and generated samples.

Applications of GANs include:

Image generation: GANs can generate realistic images, leading to applications in art, gaming, and data augmentation.

Style transfer: GANs can be used to apply the artistic aesthetic and style of one image to another.

Super-resolution: GANs can enhance the resolution and quality of images.

Data augmentation: GANs can generate synthetic data to augment training datasets and improve model generalisation.

22) What is transfer learning, and how can it be applied in deep learning models?

Transfer learning is a technique in which a previously trained neural network is used as the starting point for a new, related task. Instead of training a deep learning model from scratch, transfer learning leverages knowledge learned on one task for another. This is particularly useful when the new task has limited labelled data.

In a common setup, the pre-trained model's early layers, which act as a feature extractor, are frozen to preserve the learned representations. The remaining layers, typically including a new task-specific output layer, are trained on the new task using the available labelled data. This process allows the model to adapt quickly to the new task and often leads to better generalisation and faster convergence.

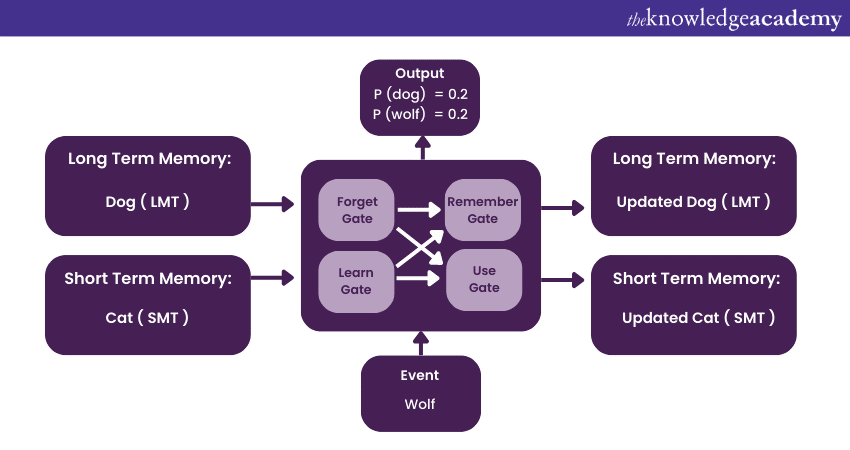

23) Discuss the working of the Long Short-Term Memory (LSTM) network.

Long Short-Term Memory (LSTM) is a variant of Recurrent Neural Networks (RNN) designed to handle the vanishing gradient problem, which is common in traditional RNNs. LSTM incorporates memory cells and three gates: the input gate, output gate, and forget gate.

a) Input gate: Controls how much new information is added to the memory cell.

b) Output gate: Controls how much information is output from the memory cell.

c) Forget gate: Controls how much information from the previous state is retained in the memory cell.
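The gate equations can be written out for a single scalar LSTM cell, as in this sketch. Alongside the three gates, a standard LSTM computes candidate values g, which the input gate scales before they are added to the cell state. The weights are arbitrary illustrative numbers. Real LSTMs use vectors and weight matrices, but the arithmetic per unit is the same.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell; p maps each name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b
    f = sigmoid(pre("forget"))       # forget gate: how much of c_prev to keep
    i = sigmoid(pre("input"))        # input gate: how much of the candidate to write
    o = sigmoid(pre("output"))       # output gate: how much of the cell to expose
    g = math.tanh(pre("candidate"))  # candidate values
    c = f * c_prev + i * g           # new cell state
    h = o * math.tanh(c)             # new hidden state
    return h, c

params = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.6, -0.2, 0.0),
    "output": (0.3, 0.4, 0.0),
    "candidate": (0.9, 0.5, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Because the cell state is updated additively (f * c_prev + i * g) rather than repeatedly squashed, gradients can flow across many time steps. This is how the LSTM mitigates the vanishing gradient problem.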

24) How does the attention mechanism work in Natural Language Processing (NLP)?

The attention mechanism is a crucial component in modern NLP models such as Transformer-based architectures. It enables the model to focus on the most relevant parts of the input sequence while making each prediction. Earlier sequence-to-sequence models compressed the entire input into a single fixed-length vector, which made it hard to retain the relevant context from long inputs.

The attention mechanism addresses this by computing an attention weight for each word based on its relevance to the output being produced. These attention weights guide the model's focus, allowing it to pay more attention to relevant words and less attention to irrelevant ones. The attention mechanism has significantly improved the performance of NLP tasks like machine translation, text summarisation, and question-answering systems.
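A common concrete form is the scaled dot-product attention used in Transformers. Each key is scored against a query, and the scores are divided by the square root of the vector dimension. A softmax then turns the scores into weights that sum to 1, and the output is the weighted sum of the values. This single-query sketch uses toy vectors; other attention variants score query-key pairs differently.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(query, keys, values):
    """Attention output and weights for one query over a sequence of key/value vectors."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return output, weights

keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
out, w = scaled_dot_product_attention([4.0, 0.0], keys, values)
print(w)    # the first and third keys match the query and get most of the weight
print(out)
```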

25) Describe the concept of Monte Carlo methods and their role in machine learning.

Monte Carlo methods are a broad class of computational algorithms that use repeated random sampling to obtain numerical results. In machine learning, Monte Carlo methods are employed for various purposes:

a) Estimation: They are used to estimate complex mathematical integrals, expectations, or probabilities that are difficult to solve analytically.

b) Bayesian inference: Monte Carlo methods, such as Markov Chain Monte Carlo (MCMC), are used to draw samples from posterior distributions in Bayesian models.

c) Reinforcement learning: In some reinforcement learning algorithms, like Monte Carlo Policy Evaluation, they are used to estimate state values or action values by sampling episodes.

d) Optimisation: Monte Carlo methods are employed in some optimisation algorithms, like Simulated Annealing, to explore the search space effectively.
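In its simplest form, Monte Carlo estimation replaces an integral or expectation with an average over random samples, as in this sketch. Both targets have known exact answers, so each estimate can be compared with the truth. For X uniform on [0, 1], E[X²] = 1/3, and the second target is π. The sample sizes and seed are arbitrary.

```python
import random

def mc_expectation(f, sampler, n, seed=0):
    """Estimate E[f(X)] by averaging f over n draws from sampler(rng)."""
    rng = random.Random(seed)
    return sum(f(sampler(rng)) for _ in range(n)) / n

def mc_pi(n, seed=0):
    """Estimate pi as 4 * P(point uniform in the unit square falls inside the quarter circle)."""
    rng = random.Random(seed)
    inside = sum(1 for _ in range(n) if rng.random() ** 2 + rng.random() ** 2 <= 1.0)
    return 4 * inside / n

estimate = mc_expectation(lambda x: x * x, lambda rng: rng.random(), 100_000)
print(estimate)        # close to 1/3
print(mc_pi(100_000))  # close to 3.1416
```

The error of such estimates shrinks roughly in proportion to 1/sqrt(n), regardless of dimension. This is why Monte Carlo is attractive for high-dimensional integrals.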

26) Explain the difference between batch gradient descent and stochastic gradient descent.

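Batch Gradient Descent (BGD), Stochastic Gradient Descent (SGD), and Mini-Batch Gradient Descent are variants of the same optimisation algorithm, used to minimise the cost (or loss) function during model training. They differ in how much data is used to compute each gradient: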

a) Batch Gradient Descent: In Batch Gradient Descent, the entire training dataset is used to calculate the gradient of the cost function. The model's parameters are then updated after processing the entire dataset. This method ensures stable convergence but can be computationally expensive for large datasets.

b) Stochastic Gradient Descent (SGD): In SGD, only one training sample is randomly selected and used to calculate the gradient at each iteration. The model parameters are updated immediately after processing each sample. SGD can converge faster due to more frequent parameter updates but may exhibit higher variance in the training process.

c) Mini-Batch Gradient Descent: Mini-batch gradient descent strikes a balance between batch gradient descent and SGD by using a small subset (mini-batch) of the training data to compute the gradient and update the parameters. It combines the advantages of both methods, offering faster convergence with reduced variance.
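All three variants differ only in how many examples feed each gradient estimate, so one function can cover them. This sketch fits a straight line by minimising mean squared error on noise-free toy data. Setting `batch_size` to the dataset size gives batch gradient descent, 1 gives SGD, and anything in between gives mini-batch gradient descent. The learning rate and epoch count are assumptions tuned for this toy problem.

```python
import random

def gradient_descent(data, batch_size, lr=0.05, epochs=200, seed=0):
    """Fit y = w*x + b by minimising mean squared error with (mini-)batch updates."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)  # new example order each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            n = len(batch)
            # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / n
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / n
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

points = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]  # exact line y = 2x + 1
for bs in (len(points), 1, 4):
    print(bs, gradient_descent(points, bs))  # each close to (2, 1)
```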

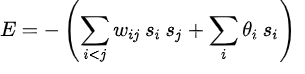

27) What are Boltzmann Machines, and how are they used in training deep networks?

Boltzmann Machines (BMs) are generative stochastic neural networks with visible and hidden units. They learn a probability distribution over their inputs, classically binary data. They are used for feature learning, dimensionality reduction, and unsupervised pretraining of deep neural networks. Two important variants are Restricted Boltzmann Machines (RBMs) and Deep Boltzmann Machines (DBMs).

a) Restricted Boltzmann Machines (RBMs): RBMs have no connections within the visible layer or within the hidden layer; connections exist only between visible and hidden units. They are trained using contrastive divergence or persistent contrastive divergence.

b) Deep Boltzmann Machines (DBMs): DBMs have connections between multiple layers of hidden units, enabling them to model more complex data distributions.

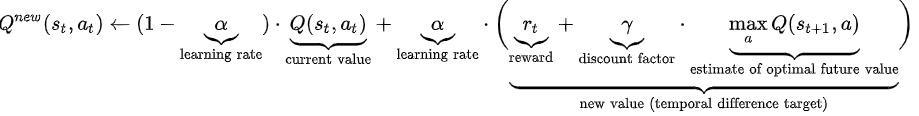

28) Discuss the concept of Q-learning in Reinforcement Learning.

Q-learning is a model-free reinforcement learning algorithm used to learn optimal policies for decision-making in an environment. It is based on the Bellman optimality equation, which expresses the value of a state-action pair as the immediate reward plus the discounted maximum value achievable from the next state.

Q-learning iteratively updates the Q-values (expected cumulative future rewards) for state-action pairs based on the observed rewards and transitions in the environment. The agent explores the environment by taking actions and updates its Q-values accordingly. Given sufficient exploration and a suitably decaying learning rate, the Q-values converge to the optimal Q-values. These represent the maximum expected cumulative reward for each state-action pair.

Q-learning is widely used in problems where an agent interacts with an unknown environment, such as game playing, robotic control, and autonomous vehicles.
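The update rule can be sketched in tabular form on a hypothetical toy environment: a five-state corridor where moving right from the fourth state reaches a terminal goal with reward 1. The sketch uses epsilon-greedy exploration and the update Q(s, a) ← Q(s, a) + α[r + γ max Q(s′, ·) − Q(s, a)]. The environment and the values of α, γ, and ε are illustrative assumptions.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Hypothetical toy environment: a 1-D corridor with reward 1 at the right end."""
    nxt = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy action choice (ties broken randomly)
            if rng.random() < epsilon or Q[state][0] == Q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if Q[state][0] > Q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Bellman target: r + gamma * max_a' Q(s', a'); no future value at the terminal state
            target = reward if done else reward + gamma * max(Q[nxt])
            Q[state][action] += alpha * (target - Q[state][action])
            state = nxt
    return Q

Q = q_learning()
policy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]
print(policy)  # greedy action in each non-terminal state (1 = move right)
```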

29) How can you implement a collaborative filtering recommendation system?

Collaborative filtering is a recommendation system technique that predicts a user's preferences from the preferences of similar users, or from the ratings of similar items. Two common approaches to implementing collaborative filtering are:

a) User-based collaborative filtering: It finds users with similar preferences to the target user and recommends items liked by those similar users. The similarity between users is measured using metrics like Pearson correlation or cosine similarity.

b) Item-based collaborative filtering: It finds items that are similar to the items liked by the target user and recommends those similar items. The similarity between items is also measured using metrics like Pearson correlation or cosine similarity.
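A minimal user-based version can be sketched with cosine similarity over co-rated items and a similarity-weighted average of other users' ratings. The users, items, and ratings are made up. Production systems usually mean-centre ratings, which turns cosine similarity into something close to Pearson correlation. They also typically restrict the sum to the top-k most similar users and use sparse matrix libraries.

```python
import math

ratings = {
    "alice": {"film_a": 5, "film_b": 3, "film_c": 4},
    "bob":   {"film_a": 4, "film_b": 3, "film_c": 5, "film_d": 4},
    "carol": {"film_a": 1, "film_b": 5, "film_d": 2},
}

def cosine_similarity(u, v):
    """Cosine similarity computed over the items both users have rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = math.sqrt(sum(u[i] ** 2 for i in common))
    norm_v = math.sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)

def predict_rating(user, item, data):
    """Similarity-weighted average of other users' ratings for the item."""
    num = den = 0.0
    for other, their in data.items():
        if other == user or item not in their:
            continue
        sim = cosine_similarity(data[user], their)
        num += sim * their[item]
        den += abs(sim)
    return num / den if den else None

print(predict_rating("alice", "film_d", ratings))  # pulled towards bob's 4, the more similar user
```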

30) Explain the use of Bayesian optimisation in tuning machine learning models.

Bayesian optimisation is a global optimisation technique used to find the optimal hyperparameters for ML models. Unlike traditional grid search or random search, Bayesian optimisation uses probabilistic models to estimate the objective function (usually a model's performance metric) and selects the next hyperparameters to evaluate based on this estimation.

The technique employs a surrogate model, often Gaussian Process Regression, to model the objective function and its uncertainty. Based on the surrogate model, an acquisition function, such as Expected Improvement or Upper Confidence Bound, is used to decide the next set of hyperparameters to evaluate.

Situation-Based Machine Learning Questions

Situation-based Machine Learning job interview questions present real-world scenarios where ML expertise is required. Interviewers use these questions to evaluate candidates' problem-solving skills, adaptability, and critical thinking. Candidates must propose effective strategies to handle imbalanced data, troubleshoot model performance drops, resolve team conflicts, and design solutions for new datasets with missing values.

31) You have a highly imbalanced dataset; how do you handle it to build an accurate model?

Handling imbalanced datasets requires careful consideration to prevent the model from being biased towards the majority class. Some strategies to address this issue are:

a) Resampling techniques: Oversample the minority class or undersample the majority class to balance the class distribution. However, undersampling can discard useful information, while oversampling by duplicating examples can lead to overfitting.

b) Synthetic data generation: Utilise techniques like the Synthetic Minority Over-sampling Technique (SMOTE). SMOTE creates new minority-class samples by interpolating between existing minority samples and their nearest neighbours rather than simply duplicating them.

c) Class weights: Assign higher weights to the minority class during model training, making it more significant in the learning process.

d) Evaluation metrics: Use appropriate evaluation metrics like precision, recall, F1-score, or Area Under the ROC Curve (AUC-ROC) to assess model performance, as accuracy may be misleading for imbalanced datasets.
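The sketch below shows why accuracy alone misleads on imbalanced data. A classifier that always predicts the majority class scores 95% accuracy on a 95:5 split yet never finds a single positive. The labels are synthetic, and the function assumes the positive class is coded as 1.

```python
def classification_report(y_true, y_pred, positive=1):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

y_true = [0] * 95 + [1] * 5
always_majority = [0] * 100
print(classification_report(y_true, always_majority))
# accuracy 0.95, but precision, recall and F1 are all 0.0
```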

32) Your model's performance drastically drops when deployed in the real world; what could be the reasons, and how do you rectify it?

Several factors could contribute to the drop in model performance during deployment:

a) Data distribution shift: If the real-world data differs significantly from the training data, the model may struggle to generalise effectively.

b) Feature drift: Changes over time in the distribution of individual input features can degrade the model's predictions.

c) Overfitting: The model may have overfitted to the training data, leading to poor generalisation.

d) Lack of real-world constraints: During development, the model may not consider practical constraints or limitations present in the real-world scenario.

To rectify the performance drop:

a) Continuous monitoring: Implement a monitoring system to track the model's performance and identify issues in real-time.

b) Data quality assurance: Ensure the quality and relevance of data used for training and evaluation, and continuously update the training data.

c) Regular model retraining: Retrain the model periodically using updated data to maintain its accuracy and relevancy.

d) Model versioning: Maintain different versions of the model to revert to a previous version if issues arise.

e) A/B testing: Perform A/B testing with multiple versions of the model to identify the most effective one.
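Continuous monitoring usually includes a drift check that compares the distribution of each input feature in production with its distribution in the training data. One widely used statistic for this is the Population Stability Index (PSI). It is swapped in here as an illustration, not something the list above prescribes. The bin edges, the small epsilon that avoids log(0), and the sample data are assumptions, and alert thresholds are a team-specific choice.

```python
import math

def population_stability_index(reference, live, edges, eps=1e-6):
    """PSI between a reference sample (e.g. training data) and a live sample, using shared bin edges."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(1 for e in edges if v >= e)] += 1  # bin index for v
        return [max(c / len(values), eps) for c in counts]
    p_ref, p_live = proportions(reference), proportions(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_ref, p_live))

train_feature = [i / 100 for i in range(100)]        # roughly uniform on [0, 1)
live_feature = [0.5 + i / 200 for i in range(100)]   # shifted towards higher values
edges = [0.25, 0.5, 0.75]
print(population_stability_index(train_feature, train_feature, edges))  # 0.0, no drift
print(population_stability_index(train_feature, live_feature, edges))   # large value, drift
```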

33) Describe a challenging project you worked on and the innovative approaches you used to overcome obstacles.

During a sentiment analysis project, the major challenge was the scarcity of labelled data for specific domains. To overcome this:

1) Data augmentation: We utilised data augmentation techniques to artificially increase the labelled data by introducing synonyms, paraphrases, and language variations.

2) Transfer learning: We employed transfer learning by fine-tuning pre-trained language models on general sentiment analysis datasets and then fine-tuning them further on the scarce domain-specific data.

3) Active learning: We used active learning to intelligently select data points from the unlabelled pool for manual annotation, maximising the model's learning with minimal human effort.

4) Domain adaptation: To tackle domain shift, we used domain adaptation techniques to align the feature distributions between the source (general) and target (domain-specific) datasets.

34) In a team-based ML project, there's a conflict in selecting the model; how do you convince your teammates about your choice?

Resolving conflicts in a team-based ML project requires effective communication and data-driven arguments:

1) Data analysis: Present a thorough analysis of the dataset, pointing out the characteristics that align with the strengths of your proposed model.

2) Literature review: Share research papers or articles supporting the effectiveness of your chosen model for similar tasks.

3) Demonstrations: Provide demonstrations or prototypes showcasing the model's performance and ease of implementation.

4) Performance metrics: Compare performance metrics of different models on validation data, demonstrating why your choice outperforms the alternatives.

5) Compromise: Be open to compromise and consider blending ideas from different team members to find a middle ground.

6) Transparent communication: Engage in transparent and respectful discussions. Honestly acknowledging the pros and cons of each approach helps the team reach a well-founded decision more efficiently.

35) You're given a new dataset with missing values in various features; propose an effective strategy to handle the missing data and build a robust model.

To handle missing data and build a robust model:

1) Data imputation: Impute missing values using mean or median imputation for numerical features, or most-frequent-category (mode) imputation for categorical features.

2) Advanced imputation techniques: Use advanced imputation methods like k-nearest neighbours (KNN) imputation or regression imputation when relationships between features exist.

3) Missing data indicators: Create binary indicators for missing values to preserve information about missingness and include them as additional features during model training.

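4) Missingness mechanism analysis: Analyse the patterns of missingness to understand whether data is missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR). Handle each case accordingly, since simple imputation can introduce bias when data is not missing completely at random.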

5) Feature importance: Assess the importance of features with missing values. If a feature has high predictive power, consider using advanced imputation techniques or explore collecting more data for that feature.

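6) Ensemble methods: Utilise tree-based ensemble methods that can handle missing data gracefully. Some gradient boosting implementations, such as XGBoost and LightGBM, can accommodate missing values without prior imputation. Check whether your chosen library's random forest implementation also supports this.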

7) Sensitivity analysis: Conduct sensitivity analysis by comparing model performance with and without imputed data to evaluate the imputation's impact on the final model.

Conclusion

Mastering the Machine Learning Interview Questions presented throughout the blog will equip you with a solid understanding of ML concepts at various levels. From beginner to advanced, these questions provide valuable insights into the field's intricacies and real-world problem-solving. With this knowledge, candidates can confidently navigate interviews and stand out as skilled machine learning practitioners.
