Data Analytics has become an integral part of various industries in the modern data-driven world. Companies are increasingly relying on Data Analytics professionals to gain valuable insights from their data and make informed business decisions. As the demand for data analysts grows, so does the importance of acing Data Analytics interviews. In this blog, we will provide 25+ Data Analytics Interview Questions and their answers, helping aspiring analysts prepare for job interviews with confidence.

Table of Contents

1) Preparing for a Data Analytics interview

2) Common Data Analytics Interview Questions

3) Technical Data Analytics Interview Questions

4) Statistical Data Analytics Interview Questions

5) Machine Learning Data Analytics Interview Questions

6) Behavioural Data Analytics Interview Questions

7) Conclusion

Preparing for a Data Analytics interview

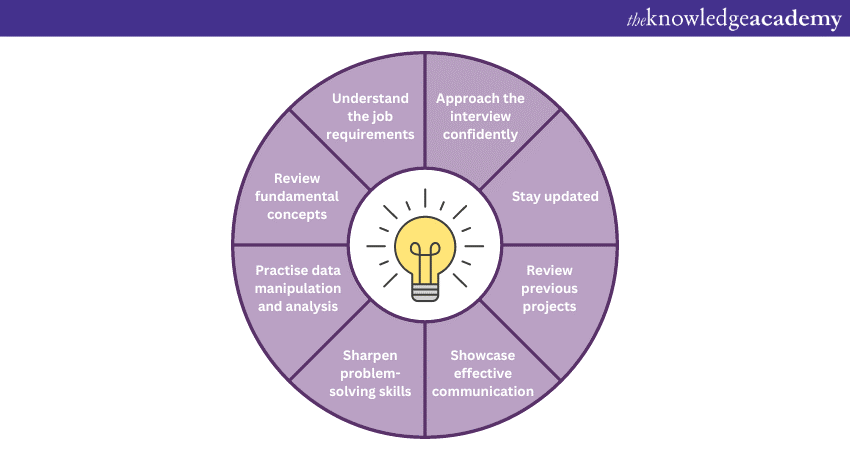

To get the most out of your preparation for a Data Analytics interview, follow these tips:

1) Understand the job requirements: It is essential to understand the job requirements and research the company to align your skills with their needs.

2) Review fundamental concepts: Make sure to review fundamental concepts in statistics and data analysis to tackle technical questions.

3) Practise data manipulation and analysis: Make sure that you practise data manipulation and analysis using tools like Python, R, or SQL for hands-on experience.

4) Sharpen problem-solving skills: Practise critical thinking so that you can break down and handle complex data challenges.

5) Showcase effective communication: Practise presenting data insights in clear and concise presentations.

6) Review previous projects: Review previous Data Analytics projects and achievements to provide concrete examples of your expertise.

7) Stay updated: Keep yourself updated with industry trends and advancements to demonstrate your awareness of the field's dynamic nature.

8) Approach the interview confidently: Go into the interview with confidence and enthusiasm, expressing your passion for Data Analytics.

Common Data Analytics Interview Questions

Data Analytics interviews are crucial for aspiring data analysts, as they allow employers to assess their technical skills, problem-solving abilities, and communication aptitude. To help you prepare for your upcoming interview, here are some common Data Analytics Interview Questions for freshers and their corresponding answers:

Q1. Explain the difference between Descriptive, Predictive, and Prescriptive Analytics.

Answer: Descriptive analytics focuses on summarising historical data to gain insights into past trends and patterns. Predictive analytics, on the other hand, uses historical data to make predictions about future outcomes. Lastly, Prescriptive analytics goes beyond predictions and suggests actions or solutions based on the analysis of past and current data.

Q2. How do you handle missing or incomplete data?

Answer: Dealing with missing data is essential to ensure accurate analyses. Common techniques include imputation, where missing values are replaced with estimated values based on existing data, and removing rows or columns with missing data when doing so does not significantly affect the overall analysis.
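To make the two techniques concrete, here is a minimal sketch in plain Python. The records, column names and the helpers `impute_median` and `drop_incomplete` are hypothetical; median imputation is only one common choice of estimate, and in day-to-day work a library such as pandas would usually handle this.

```python
import statistics

# Hypothetical toy dataset: None marks a missing value.
rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 29, "income": None},
    {"age": 41, "income": 58000},
]

def impute_median(rows, column):
    """Return a copy of rows with missing values in `column` replaced by the observed median."""
    observed = [r[column] for r in rows if r[column] is not None]
    fill = statistics.median(observed)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]

def drop_incomplete(rows):
    """Return only the rows with no missing values (listwise deletion)."""
    return [r for r in rows if all(v is not None for v in r.values())]

imputed = impute_median(impute_median(rows, "age"), "income")
complete = drop_incomplete(rows)
print(imputed[1]["age"], imputed[2]["income"])  # 34 58000
print(len(complete))                            # 2
```

Imputation keeps all four rows, while deletion halves this tiny dataset, which is why deletion is usually reserved for cases where little data is lost.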

Q3. Discuss the importance of Data Visualisation.

Answer: Data Visualisation is vital as it allows analysts to present complex data in a clear and understandable manner. Visualisations help stakeholders grasp insights quickly, identify trends, and make data-driven decisions more effectively.

Q4. What programming languages are commonly used in Data Analytics?

Answer: Python and R are the most popular programming languages for Data Analytics due to their extensive libraries, ease of use, and versatility. SQL is also essential for data manipulation and querying databases.

Q5. How do you clean and preprocess data before analysis?

Answer: Data cleaning involves removing duplicates, handling missing values, and correcting errors. Preprocessing includes scaling, transforming, and encoding data to make it suitable for analysis and modelling.

Q6. Explain the concept of clustering and its applications.

Answer: Clustering is an unsupervised learning technique that groups similar data points together. It is used for customer segmentation, anomaly detection, and pattern recognition in various domains.
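Clustering algorithms vary, but k-means is one of the most widely used. The sketch below is a bare-bones version of Lloyd's k-means algorithm on 2-D points. The points, which could stand for hypothetical customers described by two features such as spend and visit frequency, and the function name `kmeans` are illustrative assumptions rather than any library's API.

```python
import math

def kmeans(points, k, iterations=20):
    """Plain k-means (Lloyd's algorithm) on 2-D points.

    Centroids start at the first k points so the result is reproducible;
    real libraries typically use smarter seeding such as k-means++.
    """
    centroids = list(points[:k])
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for i, cluster in enumerate(clusters):
            if cluster:
                xs, ys = zip(*cluster)
                new_centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:  # converged: assignments no longer change
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers as (spend, visits) pairs forming two obvious groups.
points = [(1, 1), (8, 8), (1.5, 2), (9, 8.5), (0.5, 1.5), (8.5, 9)]
centroids, clusters = kmeans(points, 2)
print(centroids)  # [(1.0, 1.5), (8.5, 8.5)]
```

Each resulting cluster could then be treated as a customer segment. Production implementations add multiple restarts because k-means can settle in a poor local optimum.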

Q7. What is the difference between supervised and unsupervised learning?

Answer: In supervised learning, the model is trained on labelled data, where the target variable is known, to make predictions. Unsupervised learning, on the contrary, involves training the model on unlabelled data to find patterns and relationships.

Q8. How do you perform A/B testing?

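Answer: A/B testing compares two versions of a web page or app by randomly assigning users to see either version A or version B over the same period. A chosen metric, such as conversion rate or engagement, is then compared between the two groups, and a statistical test is used to check whether the difference is larger than chance alone would explain.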
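One common way to analyse a conversion-rate A/B test is a two-proportion z-test. The sketch below assumes hypothetical conversion counts and samples large enough for the normal approximation to hold; `two_proportion_z_test` is an illustrative helper, not a library function.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)          # rate under H0: no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))       # two-sided tail probability
    return z, p_value

# Hypothetical results: 200 of 5000 users converted on A, 260 of 5000 on B.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(round(z, 2), round(p, 4))
```

For these made-up numbers the p-value falls below 0.05, so version B's higher conversion rate would usually be treated as statistically significant.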

Technical Data Analytics Interview Questions

In a Data Analytics interview, technical questions play a crucial role in assessing a candidate's hands-on skills and problem-solving abilities. Let's explore some common technical questions asked during interviews along with their corresponding answers:

Q9. How do you clean and preprocess data before analysis?

Answer: Data cleaning and preprocessing are essential steps in the analytics process. To clean the data, first identify and handle missing values, duplicates, and outliers. Next, convert data types, standardise units, and resolve any inconsistencies. For preprocessing, you can then apply techniques such as normalisation, scaling, or feature engineering to make the data suitable for analysis.
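The cleaning and preprocessing steps can be sketched in plain Python. The records, the `height`/`unit` fields and the helpers `clean` and `min_max_scale` are hypothetical; dropping rows with missing values is only one option (imputation is another), and min-max scaling stands in for the normalisation step.

```python
# Hypothetical raw records: a duplicate, numbers stored as strings,
# a height recorded in centimetres instead of metres, and a missing value.
raw = [
    {"id": 1, "height": "1.72", "unit": "m"},
    {"id": 2, "height": "180", "unit": "cm"},
    {"id": 2, "height": "180", "unit": "cm"},   # exact duplicate
    {"id": 3, "height": None, "unit": "m"},
    {"id": 4, "height": "1.65", "unit": "m"},
]

def clean(records):
    seen, out = set(), []
    for r in records:
        key = tuple(sorted(r.items()))
        if key in seen:            # drop exact duplicates
            continue
        seen.add(key)
        if r["height"] is None:    # drop rows missing the value of interest
            continue
        h = float(r["height"])     # convert data type: str -> float
        if r["unit"] == "cm":      # standardise units to metres
            h /= 100
        out.append({"id": r["id"], "height_m": h})
    return out

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

cleaned = clean(raw)
scaled = min_max_scale([r["height_m"] for r in cleaned])
print(cleaned)
print(scaled)
```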

Q10. What is hypothesis testing, and how is it used?

Answer: Hypothesis testing is a statistical method used to make inferences about a population based on a sample of data. It is often used to determine whether there is a significant relationship between variables or whether observed differences could plausibly be due to chance. After setting up null and alternative hypotheses, we compute a p-value and use it to either reject or fail to reject the null hypothesis; a test never proves the null hypothesis true.
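As a concrete illustration, the sketch below runs a permutation test, one simple hypothesis test that needs no distribution tables, on two small made-up samples. The function name `permutation_test`, the data and the number of shuffles are assumptions for illustration.

```python
import random
import statistics

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    H0: both samples come from the same distribution, so the group labels
    are exchangeable. The p-value is the share of random relabellings whose
    mean difference is at least as extreme as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:len(a)]) - statistics.mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # The +1 terms count the observed labelling itself (a common convention).
    return (extreme + 1) / (n_permutations + 1)

# Hypothetical measurements from two groups.
a = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3]
b = [12.9, 13.1, 12.7, 13.0, 12.8, 13.2]
p = permutation_test(a, b)
print(round(p, 4))
```

Here every value in `b` exceeds every value in `a`, so very few shuffles reproduce the observed gap and the p-value comes out far below 0.05, leading us to reject the null hypothesis.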

Q11. How do you interpret p-values and confidence intervals?

Answer: The p-value measures the probability of observing the data, or more extreme results, under the assumption that the null hypothesis is true. A low p-value (typically less than 0.05) suggests that the data provides strong evidence against the null hypothesis, and you can reject it in favour of the alternative hypothesis. A confidence interval gives a range of plausible values for a population parameter: a 95% confidence interval is produced by a procedure that, over many repeated samples, would capture the true parameter about 95% of the time.
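The sketch below computes a 95% confidence interval for a mean using the normal approximation (mean ± z × standard error). It assumes a reasonably large sample; for small samples a critical value from the t distribution would be more appropriate. The simulated data and the helper name `mean_confidence_interval` are hypothetical.

```python
import math
import random
import statistics
from statistics import NormalDist

def mean_confidence_interval(sample, confidence=0.95):
    """Large-sample (normal-approximation) confidence interval for a mean."""
    n = len(sample)
    m = statistics.mean(sample)
    se = statistics.stdev(sample) / math.sqrt(n)       # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)     # about 1.96 for 95%
    return m - z * se, m + z * se

rng = random.Random(42)
sample = [rng.gauss(50, 10) for _ in range(200)]       # hypothetical measurements
low, high = mean_confidence_interval(sample)
print(round(low, 2), round(high, 2))
```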

Q12. Explain the Central Limit Theorem and its significance.

Answer: The Central Limit Theorem states that, irrespective of the shape of the population distribution (provided it has a finite variance), the sampling distribution of the mean of sufficiently large samples will be approximately normal. This theorem is essential because it allows you to make statistical inferences about a population based on sample means.
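A quick simulation makes the theorem tangible. The sketch below draws repeated samples of size 50 from a right-skewed exponential population (mean 1, standard deviation 1) and examines the distribution of their means; the population, sample size and number of repetitions are arbitrary choices for illustration.

```python
import random
import statistics

rng = random.Random(0)

def sample_mean(n):
    """Mean of n draws from an exponential population with rate 1."""
    return statistics.fmean(rng.expovariate(1.0) for _ in range(n))

n = 50
means = [sample_mean(n) for _ in range(5000)]

# The CLT predicts the sample means centre on the population mean (1) with a
# spread of about sigma / sqrt(n) = 1 / sqrt(50), and a roughly bell-shaped histogram.
print(round(statistics.fmean(means), 3), round(statistics.stdev(means), 3))
```

The average of the sample means lands close to 1 and their standard deviation close to 1/√50 ≈ 0.141, even though individual exponential draws are strongly skewed.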

Q13. Discuss the concept of correlation vs. causation.

Answer: Correlation measures the degree of association between two variables, but it does not imply causation. Two variables can be strongly correlated, but it doesn't mean that one causes the other. To establish causation, additional research, experimentation, and a solid understanding of the underlying mechanisms are required.

Statistical Data Analytics Interview Questions

This section of the blog will expand on some Statistical Data Analytics Interview Questions as well as the answers you will be expected to give.

Q14. Define overfitting and how to prevent it in Machine Learning models.

Answer: Overfitting occurs when a Machine Learning model performs well on the training data but fails to generalise to new, unseen data. It happens when the model learns noise and irrelevant patterns in the training data instead of the underlying relationship. To prevent overfitting, data analysts can employ several techniques:

a) Cross-validation: Splitting the data into multiple subsets to train and validate the model iteratively.

b) Regularisation: Introducing penalty terms in the model to discourage overly complex patterns.

c) Feature selection: Selecting relevant features and removing unnecessary ones to reduce model complexity.

d) Ensemble methods: Combining multiple models to improve generalisation performance.

Q15. How do you handle outliers in data analysis?

Answer: Outliers are data points that deviate significantly from the rest of the data. Handling outliers is crucial to ensure they do not unduly influence the analysis. There are various approaches to deal with outliers:

a) Removing outliers: In some cases, outliers can be removed from the dataset if they are genuine errors or anomalies. However, this should be done judiciously and with a clear understanding of the data.

b) Transformations: Applying mathematical transformations like logarithm or square root can help mitigate the impact of outliers on the data.

c) Imputation: For certain models, imputing outliers with values derived from the rest of the data can be a suitable approach.
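The three approaches can be compared on one small example. The sketch first flags outliers with Tukey's fences (values beyond 1.5 × IQR from the quartiles), a common convention that is an assumption here rather than something the approaches above require, and then removes, transforms or imputes them. The order values are hypothetical.

```python
import math
import statistics

def iqr_bounds(values, factor=1.5):
    """Tukey's fences: values outside [Q1 - factor*IQR, Q3 + factor*IQR] are flagged."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - factor * iqr, q3 + factor * iqr

# Hypothetical order values with one suspicious entry.
orders = [42, 38, 51, 45, 40, 47, 39, 44, 950]
low, high = iqr_bounds(orders)
outliers = [v for v in orders if not low <= v <= high]         # flag

kept = [v for v in orders if low <= v <= high]                 # a) remove
logged = [math.log(v) for v in orders]                         # b) log-transform
median = statistics.median(kept)
imputed = [v if low <= v <= high else median for v in orders]  # c) impute with median

print(outliers, len(kept), imputed[-1])
```

Which option is right depends on whether the 950 is a data-entry error or a genuine large order, which is exactly the judgement the answer above calls for.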

Q16. What is the difference between parametric and non-parametric statistical tests?

Answer: Parametric and non-parametric tests are two broad categories of statistical tests:

a) Parametric tests: Parametric tests assume that the data follows a specific distribution, typically the normal distribution. They require certain assumptions about the data, such as constant variance and independence. Examples of parametric tests include t-tests and ANOVA.

b) Non-parametric tests: Non-parametric tests make fewer assumptions about the data distribution and are more versatile when the data does not meet the parametric tests’ assumptions. Examples of non-parametric tests include the Mann-Whitney U test and the Wilcoxon signed-rank test.
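The practical difference shows up when a single extreme value enters the data. The sketch computes the statistic behind a parametric test (Welch's t) and a non-parametric one (the Mann-Whitney U statistic) on hypothetical samples, before and after adding an outlier. It stops at the test statistics; p-values would come from the corresponding reference distributions, for example via SciPy's `scipy.stats.ttest_ind(..., equal_var=False)` and `scipy.stats.mannwhitneyu`.

```python
import math
import statistics

def welch_t(a, b):
    """Welch's t statistic: difference in means divided by its standard error."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se

def mann_whitney_u(a, b):
    """U statistic for sample a: pairs where a beats b (ties count as 0.5)."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)

a = [5.1, 4.9, 5.3, 5.0, 5.2]
b = [5.6, 5.8, 5.5, 5.7, 5.9]
b_outlier = [5.6, 5.8, 5.5, 5.7, 59.0]   # one extreme value replaces 5.9

print(round(welch_t(a, b), 2), mann_whitney_u(a, b))                  # t is about -6
print(round(welch_t(a, b_outlier), 2), mann_whitney_u(a, b_outlier))  # |t| shrinks, U unchanged
```

The rank-based U statistic is unchanged because it only uses the ordering of values, while the outlier inflates the variance and weakens the t statistic.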

Q17. What is the p-value, and how do you use it to make decisions in hypothesis testing?

Answer: The p-value, short for "probability value," is a measure of the evidence against the null hypothesis in hypothesis testing. It represents the probability of obtaining results as extreme or more extreme than the observed results, assuming the null hypothesis is true.

In hypothesis testing, if the p-value is smaller than the predetermined significance level (usually 0.05), the null hypothesis is rejected in favour of the alternative hypothesis. A larger p-value indicates weaker evidence against the null hypothesis, so we fail to reject it; this is not the same as proving the null hypothesis true.
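The decision rule is short enough to write out. The sketch below assumes a test statistic that follows a standard normal distribution under the null hypothesis (as in a z-test) and uses hypothetical z values; the helper names are illustrative.

```python
from statistics import NormalDist

def two_sided_p_value(z):
    """P(|Z| >= |z|) for a standard normal test statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

def decide(p_value, alpha=0.05):
    """Compare the p-value with a significance level fixed before running the test."""
    return "reject H0" if p_value < alpha else "fail to reject H0"

print(decide(two_sided_p_value(2.5)))   # reject H0 (p ≈ 0.012)
print(decide(two_sided_p_value(1.2)))   # fail to reject H0 (p ≈ 0.23)
```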

Q18. Explain the concept of a normal distribution and its importance in data analysis.

Answer: A normal distribution is a symmetric probability distribution with a bell-shaped curve. Its mean, median, and mode are all equal, and the distribution is fully defined by its mean and standard deviation.

Normal distributions are crucial in data analysis because many statistical methods, including parametric tests, assume that the data is normally distributed. Additionally, normal distributions allow analysts to make probabilistic inferences and estimate the likelihood of events occurring within certain ranges.
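Python's standard library can answer such probability questions directly. The sketch below models hypothetical exam scores as normal with mean 70 and standard deviation 10, checks the familiar 68-95-99.7 rule, and estimates the probability of a score falling in a given range.

```python
from statistics import NormalDist

# Hypothetical model: exam scores ~ Normal(mean 70, standard deviation 10).
scores = NormalDist(mu=70, sigma=10)

# Share of values within 1, 2 and 3 standard deviations of the mean.
for k in (1, 2, 3):
    share = scores.cdf(70 + k * 10) - scores.cdf(70 - k * 10)
    print(k, round(share, 4))   # prints 1 0.6827, then 2 0.9545, then 3 0.9973

# Probability that a score lies between 80 and 90.
print(round(scores.cdf(90) - scores.cdf(80), 4))   # 0.1359
```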

Machine Learning Data Analytics Interview Questions

This section of the blog will expand on some Data Analytics Interview Questions on Machine Learning as well as the answers you will be expected to give.

Q19. What are the main evaluation metrics for regression and classification models?

Answer: Evaluation metrics assess the performance of Machine Learning models. For regression models, common evaluation metrics include:

a) Mean Squared Error (MSE): Calculates the average squared difference between the predicted values and the actual target values.

b) Root Mean Squared Error (RMSE): The square root of MSE, providing a measure in the same unit as the target variable.

c) Mean Absolute Error (MAE): Measures the average absolute difference between the predicted and actual values.

For classification models, essential evaluation metrics include:

a) Accuracy: The proportion of all predictions that are correct.

b) Precision: Focuses on the ratio of true positive predictions to all positive predictions, indicating the model's ability to avoid false positives.

c) Recall (Sensitivity): Represents the ratio of true positive predictions to all actual positive instances, measuring the model's ability to identify positives.

d) F1 score: The harmonic mean of precision and recall, offering a balanced measure between the two metrics.
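All of these metrics follow directly from their definitions. The sketch below computes them in plain Python for small hypothetical label lists; libraries such as scikit-learn provide equivalent, more complete functions.

```python
import math

def regression_metrics(y_true, y_pred):
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / len(errors)
    return {"MSE": mse,
            "RMSE": math.sqrt(mse),                        # same unit as the target
            "MAE": sum(abs(e) for e in errors) / len(errors)}

def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)   # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)   # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)   # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": sum(t == p for t, p in pairs) / len(pairs),
            "precision": precision, "recall": recall, "F1": f1}

reg = regression_metrics([3, 5, 2], [2, 5, 4])
cls = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print(reg)
print(cls)
```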

Q20. Explain the working of decision trees in Machine Learning.

Answer: Decision trees are a popular supervised learning algorithm that is often used for both classification and regression tasks. The working of decision trees involves the following steps:

a) Feature selection: The algorithm evaluates different features and selects the most informative one to split the data into two or more subsets.

b) Splitting: The data is split based on the selected feature, creating branches for each subset.

c) Recursive process: The above steps are recursively applied to each subset until a stopping criterion is met, such as reaching a maximum depth or minimum number of samples per leaf.

d) Leaf nodes: The process continues until all subsets are pure (contain data points of the same class) or until another stopping criterion is met.

The final decision tree forms a hierarchical structure of nodes and branches, allowing it to make predictions based on the input features.
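The heart of the feature-selection and splitting steps is scoring candidate splits. The sketch below uses Gini impurity, one common criterion (entropy is another), to find the best threshold on a single numeric feature; a full tree builder would apply `best_split` recursively to each side. The feature, labels and function names are hypothetical.

```python
def gini(labels):
    """Gini impurity: 0 for a pure node, larger for more mixed nodes."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(xs, labels):
    """Threshold on one numeric feature that minimises weighted Gini impurity."""
    best = (None, gini(labels))          # (threshold, impurity); no split by default
    for threshold in sorted(set(xs))[:-1]:
        left = [l for x, l in zip(xs, labels) if x <= threshold]
        right = [l for x, l in zip(xs, labels) if x > threshold]
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best

# Hypothetical feature (monthly usage hours) and churn labels.
hours = [2, 3, 5, 8, 12, 15]
churned = ["yes", "yes", "yes", "no", "no", "no"]
print(best_split(hours, churned))   # (5, 0.0): "hours <= 5" gives two pure leaves
```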

Q21. Discuss the difference between bagging and boosting algorithms.

Answer: Bagging (Bootstrap Aggregating) and Boosting are ensemble learning techniques that aim to improve model performance. In bagging, multiple base models (e.g., decision trees) are trained independently on bootstrap samples, that is, random samples of the training data drawn with replacement. The final prediction is obtained by averaging (for regression) or voting (for classification) the predictions of individual models. Bagging reduces variance and helps prevent overfitting.

Boosting, on the other hand, builds a strong model by training weak models sequentially. Each model tries to correct the errors of its predecessors, placing more emphasis on the data points they got wrong. Boosting often achieves higher accuracy, but it can be more prone to overfitting if not carefully tuned.

Q22. Define the role of Hadoop in processing Big Data.

Answer: Hadoop is an open-source distributed computing framework that plays a vital role in processing and analysing massive amounts of Big Data. It consists of two primary components:

a) Hadoop Distributed File System (HDFS): HDFS stores data across multiple nodes in a distributed manner, enabling high scalability and fault tolerance. Data is split into blocks and replicated across nodes for redundancy.

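b) MapReduce: MapReduce is a programming model used for parallel processing of data across the Hadoop cluster. It processes data in two phases: the "Map" phase, where each input record is transformed into intermediate key-value pairs, and the "Reduce" phase, where the values for each key are aggregated to produce the final output. Between the two phases, the framework shuffles and sorts the intermediate pairs so that all values for the same key reach the same reducer.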

Hadoop's ability to process vast volumes of data efficiently makes it an essential tool in various industries dealing with Big Data challenges.
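The classic word-count example shows the MapReduce data flow. The sketch below is a single-process simulation of the programming model in plain Python, not Hadoop's own Java API; on a real cluster, many map and reduce tasks would run in parallel over HDFS blocks. The input lines and function names are hypothetical.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: turn one input record into intermediate (key, value) pairs."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle_phase(pairs):
    """Shuffle: group all values by key (the framework does this between phases)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: aggregate all values for one key."""
    return key, sum(values)

lines = ["big data needs big tools", "data tools"]
pairs = chain.from_iterable(map_phase(line) for line in lines)
counts = dict(reduce_phase(k, v) for k, v in shuffle_phase(pairs).items())
print(counts)  # {'big': 2, 'data': 2, 'needs': 1, 'tools': 2}
```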

Behavioural Data Analytics Interview Questions

Let's explore some common behavioural Data Analytics Interview Questions and the appropriate answers that hiring managers seek:

Q23. Describe a challenging project that you previously worked on and how you overcame obstacles.

Answer: You could answer along these lines: “In my previous role, I was tasked with analysing customer churn data for a telecom company. The data was extensive and messy, making it difficult to derive meaningful insights. To overcome this, I first collaborated with the data engineering team to clean and standardise the data.”

You could continue: “I then adopted a systematic approach, breaking the problem down into manageable segments. Using advanced data visualisation techniques, I identified patterns and underlying reasons for customer churn, which helped the company implement targeted retention strategies successfully.”

Q24. How do you manage time and prioritise tasks in a project?

Answer: You can approach this question like this: “In any project, time management is crucial to meet deadlines and deliver quality results. I start by understanding the project requirements and breaking the work down into smaller tasks. I assign priority levels based on the impact each task has on the overall project. I also set realistic timelines and allocate adequate time for data exploration, analysis, and validation. Regularly reassessing progress helps me stay on track and make adjustments if needed.”

Q25. Discuss a situation where you had to present complex data analysis to non-technical stakeholders.

Answer: To answer this question, you can say: “In one of my projects, I had to present a comprehensive market analysis to the company's senior management, who had limited data expertise. To communicate effectively, I prepared a visually appealing, easy-to-understand presentation. I avoided technical jargon and focused on highlighting the key findings and actionable insights.”

Make sure to add: “By using data visualisations such as charts and graphs, I presented the information clearly and concisely, enabling the stakeholders to make well-informed decisions.”

Q26. How do you handle conflicting priorities and tight deadlines in a project?

Answer: You could answer like this: “When faced with conflicting priorities and tight deadlines, I first assess the urgency and importance of each task. I communicate with relevant stakeholders to set realistic expectations and negotiate deadlines if necessary.”

Continue: “Next, I prioritise critical tasks and allocate time accordingly. Throughout the project, I keep communication channels open with team members so that challenges are addressed promptly. By staying organised and adaptable, I make sure all essential tasks are completed on time without compromising on quality.”

Q27. Describe a situation where you had to collaborate with colleagues from different departments for a project.

Answer: For this question, you can answer along the lines of: “In a previous project, I collaborated with the marketing and sales teams to analyse customer segmentation data. To make the collaboration successful, I scheduled regular meetings to understand their specific requirements and align the analysis accordingly.”

You will be expected to continue: “I also gave them regular progress updates and sought their feedback to make sure the analysis met their needs. By fostering a collaborative environment and valuing input from all stakeholders, the team and I built a thorough understanding of customer segments, which led to more targeted marketing strategies.”

Conclusion

Becoming a proficient data analyst requires not only technical expertise but also the ability to communicate complex data insights effectively. By thoroughly preparing for Data Analytics interviews and familiarising themselves with the most frequently asked Data Analytics Interview Questions and answers, aspiring data analysts can confidently display their skills and knowledge to potential employers.
